Achieving Instant LCP on Client-Side Rendered Websites with Speed Kit

Key Takeaways

- Server-Side Rendering Layer: Speed Kit uses a Chromium-based rendering layer to create a fully rendered HTML snapshot of a client-side rendered page, which can then be cached.

- Intelligent Caching: By executing JavaScript and fetching necessary resources on the server, Speed Kit ensures that the cached version is a complete and accurate representation of the page.

- Seamless User Experience: Users receive an instant, fully-rendered page from the cache while the original interactive application rehydrates in the background, ensuring a fast initial load without sacrificing dynamic functionality.

Introduction

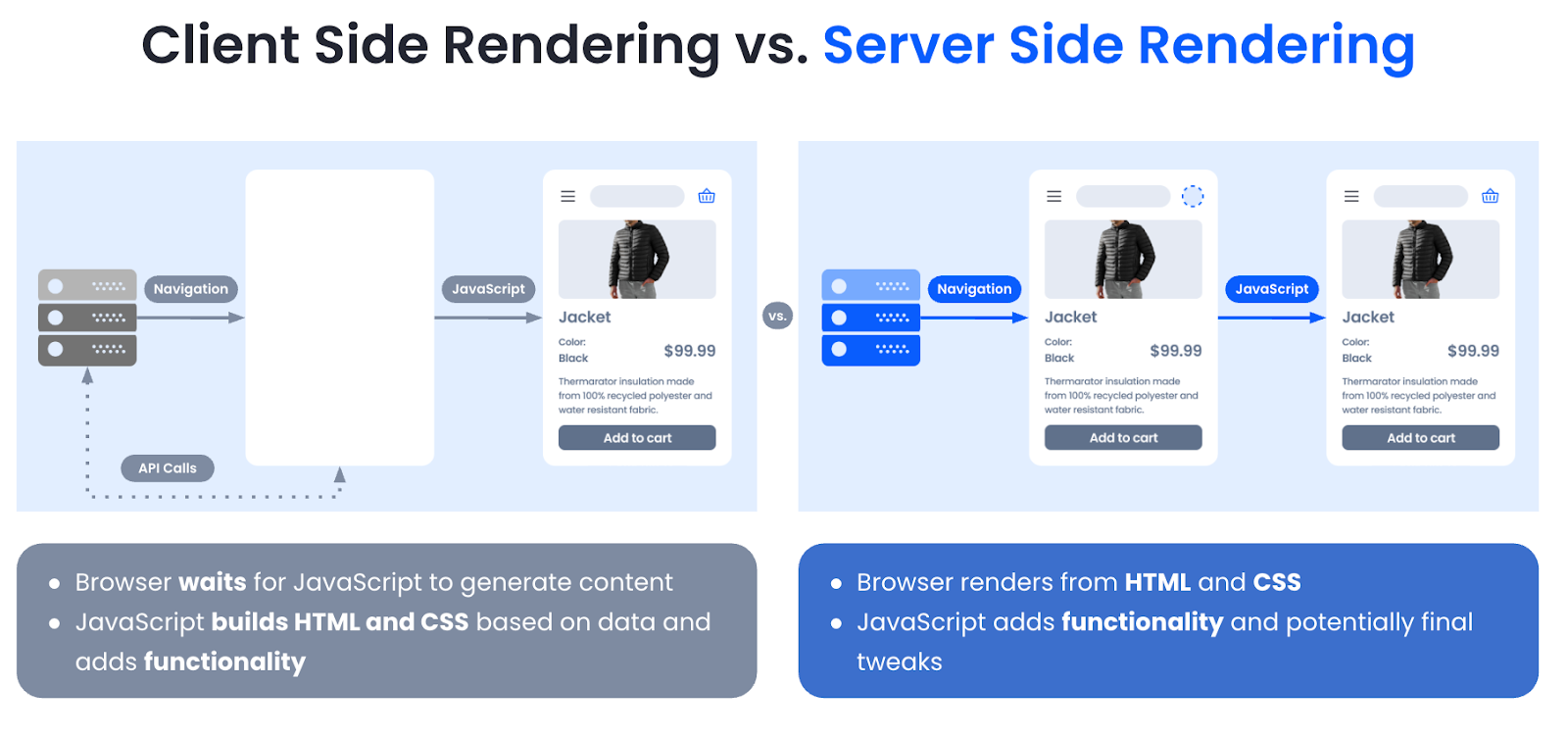

Client-Side Rendering (CSR) is a popular approach for building modern, interactive web applications. In a CSR model, the browser receives a minimal HTML document and then uses JavaScript to render the page content, which often involves fetching additional data. While this can create a fluid, app-like user experience, it often comes at a cost: initial load times can be slow, as the browser must download, parse, and execute JavaScript before the user sees any meaningful content. For e-commerce sites, this delay can lead to lost conversions and revenue.

Speed Kit addresses this challenge by introducing a server-side rendering layer that makes client-side rendered pages cacheable. By pre-rendering the page on the server and serving the result from its cache, Speed Kit can deliver a fully formed HTML document to the user's browser with a low Time to First Byte (TTFB). Because that document already contains the page content, the browser can paint it without waiting for the application's JavaScript, so users see content almost instantly. This document explains the architecture and processes that allow Speed Kit to accelerate even the most dynamic client-side rendered e-commerce shops.

Client-Side Rendering (CSR)

In a typical Client-Side Rendering (CSR) setup, the server sends a minimal HTML file to the browser. Crucially, this file doesn't contain the rendered HTML that allows the browser to display anything meaningful. Instead, it contains links to the necessary JavaScript files. The browser must then download and execute this JavaScript to generate the final page content, which may involve using data already embedded in the HTML or fetching it from an API. While the goal of offloading rendering to the client is a rich, app-like user experience, this approach introduces significant performance and reliability issues.

Performance and User Experience

This approach presents significant performance challenges and is inherently less resilient than server-side rendering (SSR). The user often sees a blank screen or a loading animation until the browser downloads, parses, and executes large JavaScript bundles, leading to a poor Largest Contentful Paint (LCP). Unlike with SSR, the rendering environment is entirely out of the development team's control; it depends on the user's device, browser, and even battery status, making it difficult to test and predict the actual user experience. On less powerful mobile devices, this client-side processing can be slow, and other JavaScript tasks can block the main thread, further delaying rendering. This heavy reliance on client-side execution also means that CSR is vulnerable to JavaScript errors, which can prevent the page from displaying correctly or at all.

This delay is compounded by how browsers discover resources. Server-rendered HTML can be streamed, parsed, and even partially rendered and painted while it is still downloading, and the browser's preload scanner can discover and fetch critical sub-resources such as JavaScript, CSS, and images early. With CSR, this mechanism is largely ineffective: the preload scanner can still find the JavaScript bundles referenced in the shell, but the initial HTML contains no meaningful content, so resources that belong to the page content itself (for example, the image that becomes the LCP element) cannot be discovered until JavaScript has executed and, often, fetched data first. The result is a chain of sequential requests that creates a significant bottleneck.
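To make the bottleneck concrete, the following TypeScript sketch models the critical path to LCP as a chain of dependent steps. The step names and millisecond values are hypothetical placeholders, not measurements. The point is only that CSR puts the bundle download, script execution, and data fetch in front of the LCP image request, whereas server-rendered HTML lets the image be requested straight from the markup.

```typescript
// Hypothetical timings in milliseconds; replace them with values from your own traces.
interface Timings {
  html: number;      // download of the HTML document
  jsBundle: number;  // download of the JavaScript bundle
  jsExecute: number; // parse and execute of the bundle
  apiFetch: number;  // data request issued by the app
  lcpImage: number;  // download of the LCP image
}

// CSR: each step starts only after the previous one finishes, because the
// LCP image is referenced only in markup that JavaScript creates.
function csrLcpEstimate(t: Timings): number {
  return t.html + t.jsBundle + t.jsExecute + t.apiFetch + t.lcpImage;
}

// Server-rendered HTML: the image is referenced in the document itself.
// This conservatively assumes no overlap; in practice the preload scanner
// can start the image download before the HTML has fully arrived.
function ssrLcpEstimate(t: Timings): number {
  return t.html + t.lcpImage;
}

const example: Timings = { html: 200, jsBundle: 400, jsExecute: 300, apiFetch: 250, lcpImage: 300 };
console.log(csrLcpEstimate(example)); // 1450
console.log(ssrLcpEstimate(example)); // 500
```

Even in this simplified model, the gap is exactly the three JavaScript-dependent steps, which is the work a pre-rendered snapshot removes from the critical path.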

The SEO Challenges of Client-Side Rendering

Client-Side Rendering (CSR) creates significant technical hurdles for search engine crawlers, which can directly impact search rankings and visibility. The primary challenges stem from how content is delivered and rendered.

- Empty Initial HTML: The server first delivers an HTML file that is essentially an empty shell. It lacks any meaningful, indexable content and instead contains links to JavaScript files that must be executed to build the page.

- Intensive Crawler Rendering: This forces search engines like Googlebot into a resource-intensive, two-step process. The crawler cannot simply index the HTML; it must download, parse, and execute the JavaScript to see the final content. This requires significant computational resources from the crawler.

- Crawl Budget Depletion: Every website is allocated a finite "crawl budget" by search engines. The resource-intensive nature of rendering JavaScript can quickly exhaust this budget, especially for large e-commerce sites. As a result, crawlers may not have the capacity to discover and index all pages on the site.

- Risk of Rendering Errors: The entire indexing process hinges on the flawless execution of JavaScript. If there are any code errors or incompatibilities with the crawler's rendering engine, the content may fail to appear, leaving the page effectively blank and invisible to the search engine.
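One way to see the "empty initial HTML" problem from a crawler's point of view is to inspect the raw server response before any JavaScript runs. The TypeScript sketch below is a rough heuristic of our own, not how any search engine evaluates pages: it drops `<script>` and `<style>` blocks, strips the remaining tags, and counts the visible characters. The function names and the 200-character threshold are illustrative assumptions.

```typescript
// Rough heuristic: how much visible text does the raw (pre-JavaScript) HTML contain?
// Regex-based stripping is fine for a quick check but is not a real HTML parser.
function visibleTextLength(rawHtml: string): number {
  const withoutCode = rawHtml
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ");
  const text = withoutCode
    .replace(/<[^>]+>/g, " ") // drop the remaining tags
    .replace(/\s+/g, " ")     // collapse whitespace
    .trim();
  return text.length;
}

// Hypothetical threshold; tune it for your own templates.
function looksLikeEmptyShell(rawHtml: string, minChars = 200): boolean {
  return visibleTextLength(rawHtml) < minChars;
}

// A typical CSR shell: only the <title> text survives.
const csrShell = `<!doctype html><html><head><title>Shop</title>
<script src="/app.js" defer></script></head>
<body><div id="root"></div></body></html>`;

console.log(visibleTextLength(csrShell));   // 4
console.log(looksLikeEmptyShell(csrShell)); // true
```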

These issues stand in stark contrast to server-rendered pages, where crawlers receive a complete and immediately indexable HTML document, ensuring a much more efficient and reliable indexing process.

Server-Side Rendering (SSR)

With Server-Side Rendering, the server generates the full HTML for a page in response to a user's request. The browser receives a complete HTML document and can start rendering it immediately.
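As a minimal illustration, the TypeScript function below plays the role of the server: given data it has already loaded, it returns a complete HTML document whose content, including the product image, is visible to the browser's parser and preload scanner on the first pass. The `Product` shape, the markup, and the `/app.js` bundle path are hypothetical; real SSR frameworks add streaming, routing, and hydration data on top of this idea.

```typescript
interface Product {
  name: string;
  price: string;
  imageUrl: string;
}

// Escape values before interpolating them into HTML to avoid markup injection.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Runs on the server for each request: the response already contains the content.
// The script tag is still included so the page can become interactive later.
function renderProductPage(p: Product): string {
  return `<!doctype html>
<html>
  <head><title>${escapeHtml(p.name)}</title></head>
  <body>
    <main id="root">
      <h1>${escapeHtml(p.name)}</h1>
      <img src="${escapeHtml(p.imageUrl)}" alt="${escapeHtml(p.name)}">
      <p class="price">${escapeHtml(p.price)}</p>
    </main>
    <script src="/app.js" defer></script>
  </body>
</html>`;
}

const page = renderProductPage({ name: "Trail Shoe", price: "€89.00", imageUrl: "/img/trail-shoe.jpg" });
console.log(page);
```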

Performance Characteristics

The primary characteristic of SSR is its positive impact on the initial page load. Because the browser receives a content-rich HTML document, it can start displaying pixels immediately, resulting in an excellent LCP. This method is also highly effective for SEO as crawlers can easily index the content. The trade-off is a potentially higher Time to First Byte (TTFB) and increased server load. However, this server-centric approach gives the development team full control over the rendering environment; if performance issues arise, they can be addressed by scaling the server infrastructure.

Navigational Experience

While the initial load is fast, subsequent page navigations in a traditional SSR setup require a full page reload. This can feel slower than the instant transitions of a single-page application (SPA), but modern browser features such as the Speculation Rules API can mitigate this by prerendering likely next pages so that navigations feel instant.
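For reference, speculation rules are declared as JSON inside a `<script type="speculationrules">` element. The TypeScript sketch below builds such a rule set so a server-side template can emit it, asking supporting browsers to prerender links whose `href` matches a URL pattern. The `/products/*` pattern and the `moderate` eagerness are example choices, not recommendations. Browsers that do not recognize the `speculationrules` script type simply ignore the element.

```typescript
type Eagerness = "immediate" | "eager" | "moderate" | "conservative";

interface SpeculationRules {
  prerender: Array<{
    where: { href_matches: string };
    eagerness: Eagerness;
  }>;
}

// Build a document rule that prerenders links whose href matches the pattern.
function buildPrerenderRules(hrefPattern: string, eagerness: Eagerness): SpeculationRules {
  return { prerender: [{ where: { href_matches: hrefPattern }, eagerness }] };
}

// Serialize for an HTML template. "<" is written as \u003c so the JSON
// cannot prematurely close the surrounding <script> element.
function toScriptTag(rules: SpeculationRules): string {
  const json = JSON.stringify(rules).replace(/</g, "\\u003c");
  return `<script type="speculationrules">${json}</script>`;
}

const tag = toScriptTag(buildPrerenderRules("/products/*", "moderate"));
console.log(tag);
// <script type="speculationrules">{"prerender":[{"where":{"href_matches":"/products/*"},"eagerness":"moderate"}]}</script>
```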

How Speed Kit Accelerates Client-Side Rendered Pages

Speed Kit combines the best of both worlds by adding a server-side rendering layer to your client-side rendered website. This allows us to cache the fully rendered HTML, even if your origin server only provides a minimal HTML shell.

Backend Pre-Rendering Process

Our backend uses a headless browser to render the page on the server, just as a user's browser would.

- Execution: The headless browser loads your page and executes all necessary JavaScript to fetch data and build the Document Object Model (DOM).

- Snapshot: We intelligently wait until the page is fully constructed, using configurable "guards" (specific page elements) to determine when rendering is complete, and then take a snapshot of the fully-rendered HTML.

- Caching: This HTML snapshot is captured and stored in Speed Kit's multi-layered cache. Read our Dynamic Caching documentation for more information.
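The execute, wait-for-guards, snapshot sequence maps naturally onto headless-browser libraries such as Puppeteer, whose `Page` class provides `goto`, `waitForSelector`, and `content` methods. The TypeScript sketch below codes against a minimal interface with methods of those names so it runs without a browser. It is an assumption about how such a step could look, not Speed Kit's actual renderer; the guard selectors, the fake page, and the 10-second default timeout are hypothetical.

```typescript
// Minimal subset of a headless-browser page, modeled on Puppeteer's Page
// method names but declared here so the sketch is self-contained.
interface HeadlessPage {
  goto(url: string): Promise<unknown>;
  waitForSelector(selector: string, options: { timeout: number }): Promise<unknown>;
  content(): Promise<string>;
}

// Load the page, wait until every guard element exists, then capture the DOM as HTML.
async function prerender(
  page: HeadlessPage,
  url: string,
  guards: string[],
  timeoutMs = 10_000,
): Promise<string> {
  await page.goto(url); // the page's JavaScript runs and fetches data in the headless browser
  // All guards must appear. If one times out, the rejection aborts the snapshot
  // instead of caching a half-rendered page.
  await Promise.all(guards.map((g) => page.waitForSelector(g, { timeout: timeoutMs })));
  return page.content(); // serialized, fully rendered HTML, ready to be cached
}

// Fake page for demonstration: guard elements "exist" only after goto() has run.
function fakePage(renderedHtml: string, presentSelectors: string[]): HeadlessPage {
  let loaded = false;
  return {
    async goto() {
      loaded = true;
    },
    async waitForSelector(selector) {
      if (!loaded || !presentSelectors.includes(selector)) throw new Error(`timeout: ${selector}`);
    },
    async content() {
      return renderedHtml;
    },
  };
}

prerender(fakePage("<main><h1>Trail Shoe</h1></main>", ["main h1"]), "https://shop.example/p/1", ["main h1"])
  .then((html) => console.log(html));
```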

Instant Previews with the Shadow DOM

When a user visits a page, the cached HTML snapshot is delivered instantly and displayed within a Shadow DOM, a browser feature that isolates this content from the main page.

- Immediate Display: Displaying the cached HTML within a Shadow DOM dramatically improves the Largest Contentful Paint (LCP) because the user sees a complete page immediately.

- Seamless Hydration: While the user views this static preview, your JavaScript application loads in the background to become interactive, a process known as "hydration."

- Live Transition: Speed Kit uses the same configurable "guards" to detect when the JavaScript application is fully hydrated. At that moment, the Shadow DOM is seamlessly removed, transitioning the user to the live application. This transition time is closely monitored to ensure a high-quality user experience.
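A browser-side version of this lifecycle can be sketched with standard DOM calls: `attachShadow` to isolate the snapshot, `document.querySelector` to check guards, and `remove` to drop the preview. A useful property is that `querySelector` on the document does not search inside shadow roots, so a guard matches only once the live application has rendered it, never because of the cached preview. The class below is our own illustration, not Speed Kit's code. To stay runnable outside a browser it depends on small interfaces instead of DOM types, and the injectable clock stands in for whatever transition monitoring is actually used.

```typescript
// Only the parts of the DOM the sketch needs; a real <div> and `document` fit these shapes.
interface PreviewHost {
  attachShadow(init: { mode: "open" | "closed" }): { innerHTML: string };
  remove(): void;
}
interface LiveDocument {
  querySelector(selector: string): object | null;
}

class ShadowPreview {
  private readonly host: PreviewHost;
  private readonly doc: LiveDocument;
  private readonly guards: string[];
  private readonly now: () => number;
  private shownAt: number | null = null;

  constructor(host: PreviewHost, doc: LiveDocument, guards: string[], now: () => number = () => Date.now()) {
    this.host = host;
    this.doc = doc;
    this.guards = guards;
    this.now = now;
  }

  // Render the cached snapshot inside an isolated shadow tree.
  show(snapshotHtml: string): void {
    this.host.attachShadow({ mode: "open" }).innerHTML = snapshotHtml;
    this.shownAt = this.now();
  }

  // Once every guard is found in the live DOM, remove the preview and return the
  // preview-to-live duration in ms; return null while the app is still hydrating.
  tryTransition(): number | null {
    const start = this.shownAt;
    if (start === null) return null;
    const hydrated = this.guards.every((g) => this.doc.querySelector(g) !== null);
    if (!hydrated) return null;
    this.host.remove();
    this.shownAt = null;
    return this.now() - start;
  }
}

// Usage with stand-in objects and a manual clock.
let clock = 0;
let removed = false;
const liveSelectors = new Set<string>();
const shadowRoot = { innerHTML: "" };
const preview = new ShadowPreview(
  { attachShadow: () => shadowRoot, remove: () => { removed = true; } },
  { querySelector: (s) => (liveSelectors.has(s) ? {} : null) },
  ["#product-title", ".price"],
  () => clock,
);
preview.show('<h1 id="product-title">Trail Shoe</h1>');
clock = 400;
liveSelectors.add("#product-title");
const early = preview.tryTransition(); // null: ".price" is not in the live DOM yet
clock = 900;
liveSelectors.add(".price");
const done = preview.tryTransition(); // 900 ms from preview to live app
console.log(early, done, removed);
```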

This approach combines the instant load times of a static site with the rich interactivity of a modern frontend application, while guaranteeing that, once the transition to the live application is complete, the user sees the correct, up-to-date content from your origin.

Change Detection for CSR Pages

Speed Kit’s crowd-sourced change detection works seamlessly with client-side rendered pages. The core principle remains the same: using real-time user traffic to identify stale content. For a detailed explanation of the underlying mechanisms, please see the Dynamic Caching documentation.

- Content Comparison: After the live application has fully hydrated, our script compares key elements of the content rendered by the frontend application against the static HTML that was initially served from the cache.

- Triggering Updates: If a discrepancy is found (e.g., a price or stock level has changed), the browser sends a signal to our backend. This triggers an immediate revalidation and refresh of that page across our entire infrastructure, ensuring all subsequent visitors receive the fresh version.
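The comparison step can be pictured as follows: take the text of a few key elements (such as price and stock status) from the cached snapshot and from the live page, normalize whitespace, and report every field that differs. The TypeScript sketch below is illustrative only. The field names, the normalization, and the `sendRevalidation` callback are hypothetical, since this document does not specify which elements are compared or how the signal is transmitted.

```typescript
// Key fields extracted from a rendered page, e.g. { price: "€89.00", stock: "In stock" }.
type KeyFields = Record<string, string>;

const normalize = (s: string): string => s.replace(/\s+/g, " ").trim();

// Names of fields whose values differ between the cached snapshot and the live app.
function findDiscrepancies(cached: KeyFields, live: KeyFields): string[] {
  const names = Array.from(new Set(Object.keys(cached).concat(Object.keys(live))));
  return names.filter((name) => normalize(cached[name] ?? "") !== normalize(live[name] ?? ""));
}

// Called once hydration is complete; only stale pages trigger a signal.
function checkForStaleness(
  url: string,
  cached: KeyFields,
  live: KeyFields,
  sendRevalidation: (url: string, fields: string[]) => void, // hypothetical transport
): boolean {
  const changed = findDiscrepancies(cached, live);
  if (changed.length > 0) sendRevalidation(url, changed);
  return changed.length > 0;
}

const sent: string[][] = [];
const stale = checkForStaleness(
  "https://shop.example/p/1",
  { price: "€89.00", stock: "In stock" },
  { price: " €79.00 ", stock: "In stock" },
  (_url, fields) => sent.push(fields),
);
console.log(stale, sent); // stale is true; sent is [["price"]]
```

Normalizing whitespace before comparing avoids false alarms from formatting differences between the snapshot and the live DOM, so only real content changes trigger a revalidation.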