High-Performance A/B Testing With Speed Kit

Key Takeaways

- The Performance Conflict: Client-side A/B testing tools, while essential for optimization, often hide the entire page body for up to several seconds to prevent content "flicker," a practice that severely damages Core Web Vitals (CWV).

- The Speed Kit Solution: Speed Kit mitigates these negative effects by fundamentally accelerating the underlying website and supporting several popular A/B testing tools with out-of-the-box integrations.

- Actionable Best Practices: To maintain optimal performance, customers should adopt a strategic approach, including limiting concurrent tests, avoiding modifications to the Largest Contentful Paint (LCP) element, and regularly auditing and removing unused testing scripts.

Introduction

A/B testing is a critical practice for any e-commerce business aiming to optimize user experience and increase conversion rates. However, there is a fundamental conflict between the goals of A/B testing and web performance.

The most common client-side testing tools work by loading JavaScript that dynamically alters a page in the user's browser, which can introduce significant delays, visual instability, and a poor user experience. This often leads to a dilemma for businesses: sacrifice site speed for conversion rate optimization, or sacrifice optimization for a better performance score.

Speed Kit is designed to resolve this conflict. By combining our core acceleration technology with intelligent integrations and clear best practices, we enable you to run effective A/B tests without compromising the speed and responsiveness of your website. This document outlines the common performance challenges posed by A/B testing tools and explains how Speed Kit provides a comprehensive solution.

Client-Side vs. Server-Side A/B Testing

Understanding why A/B testing tools can harm performance is the first step toward solving the problem. The impact varies depending on the implementation method. Client-side testing is the more common approach due to its implementation flexibility, but it is also the primary source of performance issues. Server-side testing, on the other hand, offers significant performance advantages but introduces unique complexities, particularly when used with an advanced caching system like Speed Kit.

Client-Side A/B Testing

In client-side A/B testing, a standard HTML page is sent to every user, and a JavaScript snippet from the testing tool runs in the browser to dynamically make changes.

Pros

- Ease of Use: Campaigns can often be set up and managed through a user-friendly interface without requiring backend developer support.

- Flexibility: It's easy to test changes on almost any element of an existing page.

- Speed of Deployment: New tests can typically be launched very quickly.

Common Performance Pitfalls

Client-side A/B testing tools introduce several performance bottlenecks that directly impact Core Web Vitals and the overall user experience.

The "Flicker" Effect and Anti-Flicker Scripts

Because the A/B testing script modifies the page after it has started to load, users often see the original page for a moment before it changes to the test variation. This is known as the "flicker" effect or Flash of Original Content (FOOC), and it creates a jarring user experience.

To prevent this, A/B testing tools employ an "anti-flicker" script whose sole purpose is to hide the entire page (or parts of it) until the variation is ready to be displayed. These scripts often have a default timeout of 3 to 4 seconds: if the A/B testing script fails or is slow to load, the user is left staring at a blank page until the timeout expires. Because nothing can be painted while the page is hidden, this practice is devastating for the Largest Contentful Paint (LCP), making a good user experience nearly impossible to achieve.
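The anti-flicker pattern described above can be sketched as follows. This is a simplified model rather than any vendor's actual snippet: the page is hidden immediately and revealed either when the testing tool reports that the variation is in place or when the timeout expires, whichever happens first. `PageHider` and the `schedule` parameter are hypothetical abstractions standing in for a `<style>` rule that hides the body and for `setTimeout`, so the logic can be followed without a browser.

```typescript
// Hypothetical abstraction over "hide the page" / "show the page".
// In a browser this would typically toggle a <style> rule such as `body { opacity: 0 }`.
interface PageHider {
  hide(): void;
  show(): void;
}

type TimeoutScheduler = (callback: () => void, delayMs: number) => void;
type RevealReason = "variation-applied" | "timeout";

interface AntiFlickerHandle {
  // Called by the testing tool once the variation is in place.
  variationApplied(): void;
  // Why the page was revealed, or null while it is still hidden.
  revealedBy(): RevealReason | null;
}

// Hides the page immediately and reveals it on whichever comes first:
// the testing tool's signal or the timeout.
function installAntiFlicker(
  page: PageHider,
  timeoutMs: number,
  schedule: TimeoutScheduler,
): AntiFlickerHandle {
  let reason: RevealReason | null = null;

  const reveal = (why: RevealReason): void => {
    if (reason !== null) return; // reveal only once
    reason = why;
    page.show();
  };

  page.hide();
  // If the testing script is slow or fails, the page stays blank until this fires.
  schedule(() => reveal("timeout"), timeoutMs);

  return {
    variationApplied: () => reveal("variation-applied"),
    revealedBy: () => reason,
  };
}

// In a browser: installAntiFlicker(pageHider, 4000, (cb, ms) => { setTimeout(cb, ms); });
```

With a 4,000 ms timeout and a testing script that never arrives, nothing is painted for those 4 seconds, so any LCP candidate can only be painted after the page is revealed.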

JavaScript Payload and Execution Time

A/B testing tools rely on JavaScript, which adds to the page's weight and complexity.

- Payload Size: The testing script itself, along with the logic for all active experiments and variations, increases the amount of data the user must download. The more tests you run, the larger this payload becomes.

- Request Cascades: A/B testing is rarely a single request. Typically, an initial script loads the experiment configuration, which then triggers more requests to fetch variation-specific logic or assets. This chain of network requests adds latency.

- Main-Thread Blocking: Once downloaded, this JavaScript must be executed on the browser's main thread. This can block other critical tasks, such as rendering the page or responding to user input, leading to a poor Interaction to Next Paint (INP).
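The latency cost of a request cascade is easiest to see with a little arithmetic: each step in the chain can only start once the previous one has finished, so their round-trip times add up, whereas independent requests could overlap. The step names and millisecond values below are illustrative assumptions, not measurements of any particular tool.

```typescript
// Each step of a cascade waits for the previous one, so the latencies add up.
function sequentialLatency(stepsMs: number[]): number {
  return stepsMs.reduce((total, ms) => total + ms, 0);
}

// Independent requests overlap, so only the slowest one counts.
function parallelLatency(stepsMs: number[]): number {
  return stepsMs.length === 0 ? 0 : Math.max(...stepsMs);
}

// Illustrative cascade: tool loader -> experiment configuration -> variation code.
const cascadeMs = [120, 90, 150];
console.log(`cascade: ${sequentialLatency(cascadeMs)} ms`); // cascade: 360 ms
console.log(`if independent: ${parallelLatency(cascadeMs)} ms`); // if independent: 150 ms
```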

How Speed Kit Optimizes Client-Side Tests

Speed Kit's approach is twofold: we make your site so fast that the overhead of A/B testing is minimized, and we provide intelligent integrations that directly target the worst performance offenders.

Through our integrations with supported A/B testing frameworks, Speed Kit fundamentally changes when client-side tests are applied. Our dynamic fetcher can apply the test variations directly to the cached version of a page before it is even rendered. This means the browser receives the correct test variation from the very first moment, completely eliminating the cause of the "flicker" effect.

Because the flicker is prevented at its source, the harmful anti-flicker scripts become unnecessary. In most integrations, these scripts can be omitted entirely. This directly solves the devastating LCP problem caused by a hidden page and delivers a significantly better user experience.

For supported platforms detailed later in this document, we provide specific integration patterns to ensure tests work correctly. We are also continuously expanding our support for third-party tools. While we do not currently have out-of-the-box integrations for every platform, our team is committed to finding solutions. If a significant number of our customers use a specific system, we are open to developing a custom integration for optimal performance.

Server-Side A/B Testing

In a server-side setup, the origin server decides which variation a user should see and delivers the final HTML directly to the browser.

Pros

- Superior Performance: With no extra client-side JavaScript for rendering variations, this method is significantly faster and better for Core Web Vitals.

- No "Flicker" Effect: The user receives the final test variation from the start, ensuring a stable and seamless experience.

- Reliability: The test is not dependent on the user's browser or device performance.

Cons

- Implementation Complexity: Requires backend developer resources to implement and manage tests.

- Slower Deployment: The process of setting up a new test is typically more involved and time-consuming.

- Potential for Slower TTFB: Because the server must often communicate with an A/B testing service before rendering the page, this can add processing and network latency, increasing the Time To First Byte (TTFB).

- Caching Complications: Serving different HTML for the same URL poses a direct challenge to caching systems, including backend, network, and client caches.

How Speed Kit Manages Server-Side Tests

The core challenge for Speed Kit is cache contamination. A server-side test produces multiple HTML versions for the same URL, which conflicts with a cache's goal to store and serve a single version. If Speed Kit were to cache one variation, it would incorrectly serve it to all users, breaking the test.

Speed Kit supports two effective strategies to handle this, allowing you to choose between simplicity and maximum performance.

Option 1: Strategic Blacklisting (Simple Method)

The most straightforward solution is to add the URLs of pages with active server-side tests to the blacklist in the Speed Kit Dashboard.

When a page is blacklisted, Speed Kit bypasses HTML caching for that URL, ensuring users always receive the correct test variation directly from your origin server. While this means the page's HTML is not served instantly from our cache, Speed Kit continues to accelerate all other resources on the page, such as images, CSS, and JavaScript. This creates a reliable testing environment with minimal setup. Once the test concludes, you simply remove the page from the blacklist to restore full, instant-loading HTML caching.
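Both the cache contamination problem and the blacklisting fix can be illustrated with a toy cache that is keyed only by URL. This is a hypothetical model, not Speed Kit's implementation or its blacklist syntax: the blacklist is represented as a list of path prefixes, and the `OriginServer` function stands in for a backend that picks the variant per user.

```typescript
interface PageRequest {
  url: string;
  userId: string;
  isHtml: boolean;
}

// Hypothetical origin that chooses the test variant on the server, per user.
type OriginServer = (req: PageRequest) => string;

// Toy cache keyed only by URL, with an HTML blacklist modeled as path prefixes.
// This illustrates the idea; it is not how Speed Kit matches blacklist rules.
class UrlKeyedCache {
  private readonly store = new Map<string, string>();
  private readonly origin: OriginServer;
  private readonly htmlBlacklist: string[];

  constructor(origin: OriginServer, htmlBlacklist: string[] = []) {
    this.origin = origin;
    this.htmlBlacklist = htmlBlacklist;
  }

  fetch(req: PageRequest): string {
    const path = new URL(req.url).pathname;
    // Blacklisted HTML bypasses the cache, so each user gets their own variant.
    if (req.isHtml && this.htmlBlacklist.some((prefix) => path.startsWith(prefix))) {
      return this.origin(req);
    }
    // Everything else, including images, CSS, and JS on blacklisted pages, is cached by URL.
    const cached = this.store.get(req.url);
    if (cached !== undefined) return cached;
    const body = this.origin(req);
    this.store.set(req.url, body);
    return body;
  }
}
```

Without a blacklist, the second visitor receives whatever variant the first visitor caused to be cached. With the page's path blacklisted, each visitor gets their own variant from the origin, while static resources are still served from the cache.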

Option 2: Pre-Rendering All Variations (Advanced Method)

A more advanced solution that preserves full caching benefits is to have your backend render all test variations directly into the base HTML of the page. A small inline script then runs on the client side to select and display the correct variation for a specific user, hiding the others.

- Benefits: The major advantage of this approach is that the HTML document is identical for all users, making it fully cacheable by Speed Kit. This allows you to run server-controlled tests while still achieving an instant Time To First Byte (TTFB) and the maximum performance uplift.

- Considerations: This advanced method requires custom implementation and must be coordinated with our Product Integration Team. The initial HTML payload will be larger, as it contains the markup for all variations. Cumulative Layout Shift (CLS) is generally not an issue, as a small inline script ensures only the relevant test variation is displayed from the start.
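A minimal sketch of the client-side selection step, assuming each pre-rendered variation is wrapped in markup such as `<div data-ab-test="hero" data-ab-variant="B">` and that a stable visitor ID is available to the inline script (for example, from a first-party cookie). The attribute names, the hashing scheme (32-bit FNV-1a), and the function names are hypothetical choices for illustration; the actual mechanism would be agreed with the Product Integration Team.

```typescript
// 32-bit FNV-1a hash over UTF-16 code units: small, fast, and deterministic,
// which is all a bucketing function in an inline script needs.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// The same visitor always lands in the same variant for a given test.
function assignVariant(visitorId: string, testId: string, variants: readonly string[]): string {
  if (variants.length === 0) throw new Error("at least one variant is required");
  return variants[fnv1a32(`${testId}:${visitorId}`) % variants.length];
}

// CSS that hides every pre-rendered variant of the test except the chosen one.
function hideOtherVariantsCss(testId: string, chosen: string): string {
  return `[data-ab-test="${testId}"]:not([data-ab-variant="${chosen}"]) { display: none !important; }`;
}
```

In the page, the inline script would run in `<head>`, compute the variant, and write the resulting CSS into a `<style>` element before the body is parsed, so the other variations are never painted. Deterministic hashing keeps a visitor in the same bucket across page views without a server round trip.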

Supported A/B Testing Tool Integrations

Speed Kit provides ready-made integrations for several popular A/B testing platforms. These integrations are designed to ensure the testing scripts work in harmony with Speed Kit's acceleration technologies.

The Core Challenge and Speed Kit's Solution

The primary challenge is timing. A/B testing tools typically apply their changes after the page has already started rendering, causing a jarring "flicker." Our tool integrations solve this by synchronizing the test logic: the test is applied first to the cached version of the page for an instant display, and then again to the live content from the origin before that content is merged. This ensures the user sees the correct test variation from the beginning.
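The timing difference can be illustrated with a deliberately simplified model in which a variation is a pure function over the page's HTML and the cached copy matches the origin response. Real integrations work on the DOM and use merge logic that is not shown here; all names below are hypothetical.

```typescript
// A variation is modeled as a pure transformation of the page's HTML.
type Variation = (html: string) => string;

interface Frame {
  label: string;
  html: string;
}

// Classic client-side testing: the original is painted first, the variation later.
function withoutIntegration(cached: string, origin: string, variation: Variation): Frame[] {
  return [
    { label: "first paint: cached original", html: cached },
    { label: "test script applied", html: variation(origin) },
  ];
}

// Two-step application: the variation is applied to the cached copy before rendering,
// and again to the origin response before it is merged.
function withIntegration(cached: string, origin: string, variation: Variation): Frame[] {
  return [
    { label: "first paint: cached copy + variation", html: variation(cached) },
    { label: "after merge: origin + variation", html: variation(origin) },
  ];
}

// The user sees flicker whenever the visible content changes between frames.
function flickers(frames: Frame[]): boolean {
  return frames.some((frame, i) => i > 0 && frame.html !== frames[i - 1].html);
}

const page = "<h1>Free shipping</h1>";
const variation: Variation = (html) =>
  html.replace("<h1>Free shipping</h1>", "<h1>Free shipping over 50 EUR</h1>");

console.log(flickers(withoutIntegration(page, page, variation))); // true
console.log(flickers(withIntegration(page, page, variation))); // false
```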

Important Considerations for Flicker-Free Testing

This advanced, flicker-free approach has specific requirements for tests that are applied during the initial page rendering:

- Direct Integration Required: The A/B testing script must be integrated directly into the page's HTML, not loaded asynchronously via a tag manager.

- JavaScript Dependencies: The approach may not work if the test code has dependencies on your site's main JavaScript files, as those scripts may not be available when the test is applied to the cached version.

- Avoid Startup Animations: Avoid using startup animations like fade-ins on tested elements. Because the test code is applied twice, these animations can re-run after the final content merge, creating an unwanted visual effect.

- Risk of Skewed Analytics: If your test variation includes custom tracking code (e.g., analytics beacons), be aware that this code may be executed twice. This double execution can lead to inflated tracking data, potentially skewing the results of your experiment.
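One way to limit the analytics risk is to guard tracking calls so that each event is sent at most once per page view. The sketch below is hypothetical: `SendBeacon` stands in for whatever your analytics tool provides (in a browser it might wrap `navigator.sendBeacon`), and `__abTracker` is an invented global name. The guard only helps if both executions of the test code share the same tracker, which is why it is stored on a page-global object rather than created anew inside the variation code each time.

```typescript
type SendBeacon = (eventName: string, payload: Record<string, string>) => void;
type Tracker = (experimentId: string, eventName: string, payload?: Record<string, string>) => boolean;

// Sends each (experiment, event) pair at most once; returns false for duplicates.
function createOncePerPageTracker(send: SendBeacon): Tracker {
  const sent = new Set<string>();
  return (experimentId, eventName, payload = {}) => {
    const key = `${experimentId}:${eventName}`;
    if (sent.has(key)) return false; // duplicate from the second execution
    sent.add(key);
    send(eventName, { ...payload, experimentId });
    return true;
  };
}

// Both executions must share one tracker, so it lives in a page-global slot.
// "__abTracker" is a hypothetical name.
function getPageTracker(send: SendBeacon): Tracker {
  const slot = globalThis as typeof globalThis & { __abTracker?: Tracker };
  return (slot.__abTracker ??= createOncePerPageTracker(send));
}
```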

These limitations do not apply to tests that are triggered by user interaction (e.g., a click) or are activated after the page has fully loaded. Similarly, tests running on pages that are not accelerated by Speed Kit (e.g., a checkout funnel) are not impacted.

For tests that have dependencies on your site's scripts, you can trigger your test code on the DOMContentLoaded event. At this point, all necessary scripts are typically available, and this timing does not interfere with Speed Kit's merge logic.
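A minimal sketch of such a trigger, assuming your test code is wrapped in a function. If the document is still loading, the test code waits for DOMContentLoaded; if that point has already passed, it runs immediately. Classic synchronous scripts and `defer` scripts have executed by the time DOMContentLoaded fires, while `async` scripts may not have, which is why the dependencies are only typically available. `DocumentLike` and `applyVariation` are hypothetical names.

```typescript
// Minimal subset of `document` used here, so the sketch does not depend on DOM typings.
interface DocumentLike {
  readyState: string;
  addEventListener(type: "DOMContentLoaded", listener: () => void, options?: { once?: boolean }): void;
}

// Runs the test code once the DOM has been parsed; if that point has already
// passed ("interactive" or "complete"), runs it immediately.
function runAfterDomContentLoaded(doc: DocumentLike, testCode: () => void): void {
  if (doc.readyState === "loading") {
    doc.addEventListener("DOMContentLoaded", testCode, { once: true });
  } else {
    testCode();
  }
}

// In the page: runAfterDomContentLoaded(document, applyVariation);
```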

Due to these considerations and potential tool-specific limitations, the flicker-free testing approach is not active by default and requires communication with our Product Integration Team to ensure a correct setup.

Dynamic Yield

Dynamic Yield is a comprehensive personalization platform that helps businesses deliver individualized customer experiences. It offers a full suite of tools, including both client-side and server-side A/B testing, product recommendations, and targeted messaging. For more information, visit the Dynamic Yield website.

Using the Graphical Editor (Recommended)

Tests created using Dynamic Yield's graphical editor will work out-of-the-box with Speed Kit. The editor is designed to create stable tests that are fully compatible with our dynamic content merging.

Using Custom JavaScript

While it is possible to use custom JavaScript to run tests, this approach requires careful implementation to avoid issues. If you use custom code, be aware that the general limitations described in the "Important Considerations for Flicker-Free Testing" section apply.

To ensure compatibility with Speed Kit’s rendering process, avoid using campaign conditions that rely on specific browser lifecycle events or polling mechanisms.

- onDOMContentLoaded/DOMContentLoaded: These event-based triggers are incompatible because Speed Kit applies tests before the standard DOMContentLoaded event fires.

- waitForElementAsync: This method, which polls for an element to appear, can lead to unpredictable behavior due to the dynamic content merging process.

Kameleoon

Kameleoon is a comprehensive experimentation and personalization platform that offers a wide range of features, including A/B testing, feature flagging, and AI-driven personalization. It supports both client-side and server-side implementations, giving teams flexibility in how they run their tests. For more information, visit the Kameleoon website.

Using the Graphical Editor (Recommended)

Tests created using Kameleoon's graphical editor will work out-of-the-box with Speed Kit. The editor is designed to create stable tests that are fully compatible with our dynamic content merging.

Using Custom JavaScript

If you use custom JavaScript code for your tests, we recommend following Kameleoon's best practices, such as using their asynchronous activation methods like runWhenElementPresent and runWhenConditionTrue.

Unsupported Methods

Implementation methods that are not recommended by Kameleoon, such as using interval polling or MutationObserver, are only partially supported by Speed Kit. Complex, nested activation logic can also cause issues. Specifically, avoid:

- Nesting Activation Methods: Using runWhenConditionTrue within runWhenElementPresent creates complex timing dependencies that may not resolve correctly during Speed Kit's two-step application process.

- Polling with setInterval: Using setInterval within runWhenElementPresent or other activation methods is a form of polling that can interfere with the content merge and is not a recommended practice.
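For comparison, a flat activation that follows Kameleoon's recommended pattern might look like the sketch below. It assumes that `Kameleoon.API.Core.runWhenElementPresent` accepts a CSS selector and a callback receiving the matched elements; `KameleoonCoreLike` is a hypothetical typing of that subset, and the selector and headline text are invented. Check Kameleoon's developer documentation for the exact signatures.

```typescript
// Assumed subset of Kameleoon.API.Core, based on the activation methods named above.
// Verify the exact signatures (e.g. the optional polling interval) in Kameleoon's documentation.
interface TextElementLike {
  textContent: string | null;
}

interface KameleoonCoreLike {
  runWhenElementPresent(selector: string, callback: (elements: TextElementLike[]) => void): void;
}

// One flat activation call: no runWhenConditionTrue nested inside it and no setInterval.
// Setting textContent is idempotent, so running this on both the cached copy and the
// merged origin content yields the same result.
function applyHeadlineVariation(core: KameleoonCoreLike): void {
  core.runWhenElementPresent("#hero-headline", (elements) => {
    for (const element of elements) {
      element.textContent = "Free shipping over 50 EUR";
    }
  });
}

// In the variation code (assuming the Kameleoon global is available):
// applyHeadlineVariation(Kameleoon.API.Core);
```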

VWO (Visual Website Optimizer)

VWO (Visual Website Optimizer) is a leading A/B testing and conversion rate optimization platform used by businesses worldwide. While best known for its user-friendly visual editor for client-side testing, VWO also provides robust capabilities for server-side testing, making it a versatile solution for any optimization strategy. For more information, visit the VWO website.

The following options detail how to configure VWO's client-side campaigns to work flawlessly with Speed Kit.

Compatible by Default

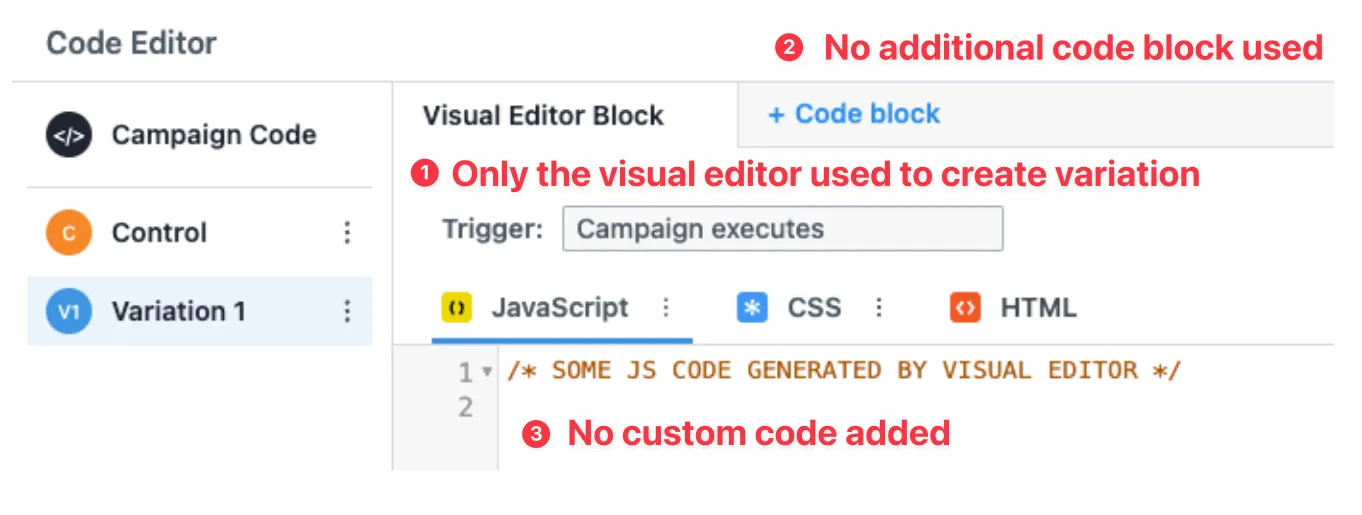

Variations only using Visual Editor Blocks

If a test variation is created via the visual editor and contains no added code blocks, it is automatically compatible with Speed Kit. No changes are required. Please note: if you use custom code in a variation created via the visual editor, you must use an additional code block and follow the guidance below.

Variations only using CSS in code blocks

If a test variation only adds custom CSS to the page but does not change DOM nodes, it is automatically compatible with Speed Kit. No changes are required. These kinds of tests are ideal because CSS rules take effect as soon as the matching elements are rendered, which leaves very little room for flicker.

Compatible with Specific Settings

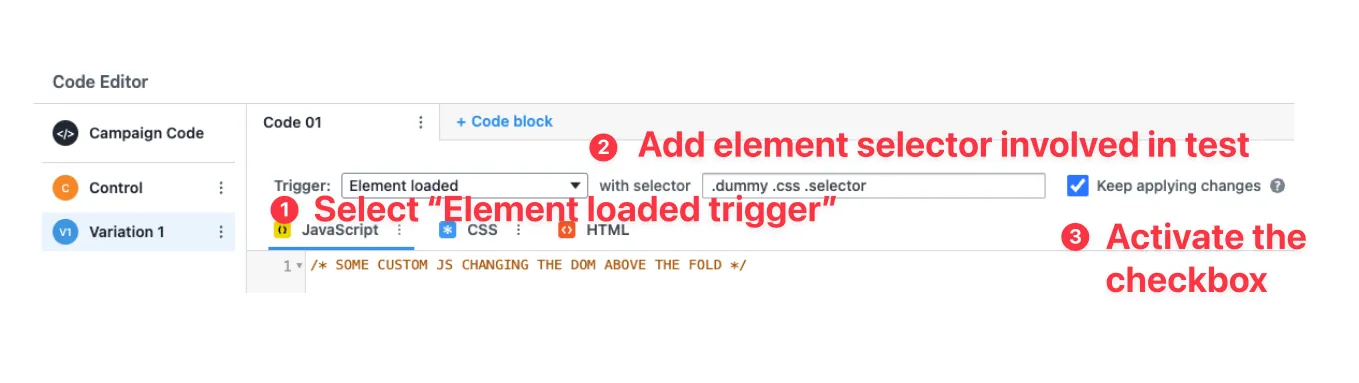

Variations changing DOM nodes above the fold

If a test variation uses code blocks with custom JavaScript to change DOM nodes "above the fold" (in the initial viewport), these settings will ensure compatibility with Speed Kit:

- Use “Element loaded” as the trigger for the code block and use a selector for the element you want to change.

- Check the “Keep applying changes” checkbox. Note that this can result in the JS code being executed multiple times.

These settings are good practice in general, as they help prevent flicker even without Speed Kit.
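Because “Keep applying changes” can execute the code block more than once, any DOM change it makes should be idempotent. A minimal sketch, assuming the code has access to the target element matched by the “Element loaded” selector; `BadgeHost`, the badge markup, and the `ab-shipping-badge` class are hypothetical.

```typescript
// Minimal subset of the target element used here (an assumption, so the sketch
// does not depend on DOM typings).
interface BadgeHost {
  querySelector(selector: string): unknown;
  insertAdjacentHTML(position: "beforeend", html: string): void;
}

// Idempotent DOM change: the code may run several times, but the badge is only
// inserted if it is not already present. Returns true if it inserted the badge.
function addShippingBadge(host: BadgeHost): boolean {
  if (host.querySelector(".ab-shipping-badge") !== null) return false;
  host.insertAdjacentHTML("beforeend", '<span class="ab-shipping-badge">Free shipping</span>');
  return true;
}
```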

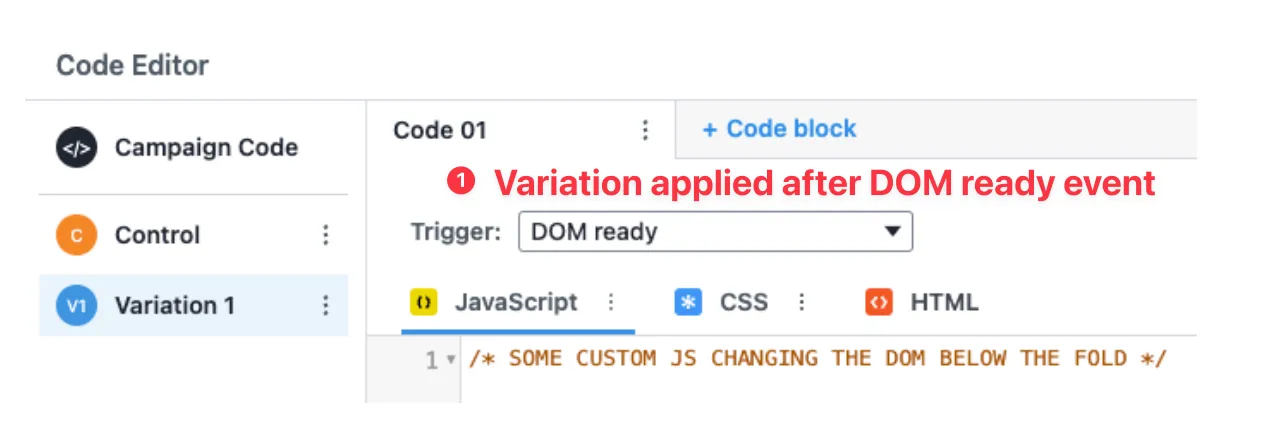

Variations changing DOM nodes below the fold

If a test variation uses code blocks with custom JavaScript to change DOM nodes "below the fold" (only visible after scrolling or interaction), the settings are even simpler.

Just use “DOM ready” as the trigger for the code block. This ensures the variation is applied after Speed Kit has finished its execution. For below-the-fold content, there is no risk of flicker, which makes this option safe and simple. The same setting also works if the changes target a popup or any other content that is not visible immediately after the page renders.

Best Practices for High-Performance A/B Testing

Beyond Speed Kit's automated solutions, how you plan and execute your A/B tests has a major impact on performance. We recommend the following best practices to all customers.

Strategic Test Management

Acknowledge the Strategic Trade-Off

Every A/B test represents a trade-off, because running a test is not free: it introduces a real performance cost that can hurt user experience and conversions, and that cost must be weighed against the potential conversion lift from a successful experiment. Therefore, every test should be a deliberate business decision.

Focus on High-Impact Opportunities

To maximize your return on this "performance investment," use your analytics data to identify the biggest drop-off points in your customer funnel. Concentrate your testing efforts on these high-impact areas where improvements can lead to the most significant business gains. Before launching a test, critically evaluate its potential. You can even discuss your hypothesis with an AI model to gauge the potential uplift. If the projected gain is not substantial, the performance cost of running the test may outweigh the potential benefits.

Consider Traffic and Test Duration

Be cautious when planning tests for pages with low traffic. To achieve a statistically significant result, a test on a low-traffic page may need to run for weeks or even months. This means accepting a performance degradation for a prolonged period for a potentially minor uplift. Prioritize tests on pages where you can get clear results quickly.
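To estimate how long a test must run, a standard normal-approximation formula for comparing two conversion rates can be used. The sketch assumes a two-sided test at 95% confidence and 80% power with traffic split evenly across variants; the conversion rates and daily traffic figures are illustrative, and your testing platform's own calculator should take precedence for real planning.

```typescript
// n per variant = (z_alpha/2 + z_beta)^2 * (p1(1 - p1) + p2(1 - p2)) / (p2 - p1)^2
// Two-sided test at 5% significance (z = 1.96) with 80% power (z = 0.8416).
const Z_ALPHA_HALF = 1.96;
const Z_BETA = 0.8416;

// Visitors needed per variant to detect a change from baselineRate to expectedRate.
function visitorsPerVariant(baselineRate: number, expectedRate: number): number {
  const variance = baselineRate * (1 - baselineRate) + expectedRate * (1 - expectedRate);
  const effect = expectedRate - baselineRate;
  return Math.ceil(((Z_ALPHA_HALF + Z_BETA) ** 2 * variance) / effect ** 2);
}

// Days the test must run, assuming traffic is split evenly across variants.
function testDurationDays(
  baselineRate: number,
  expectedRate: number,
  variants: number,
  dailyVisitors: number,
): number {
  return Math.ceil((visitorsPerVariant(baselineRate, expectedRate) * variants) / dailyVisitors);
}

// Example: 3% baseline conversion, hoping for a 10% relative lift (3.3%), two variants.
console.log(visitorsPerVariant(0.03, 0.033)); // 53209
console.log(testDurationDays(0.03, 0.033, 2, 20_000)); // 6
console.log(testDurationDays(0.03, 0.033, 2, 1_000)); // 107
```

The same hoped-for lift that needs about six days on a page with 20,000 daily visitors needs more than three months on a page with 1,000, which is exactly the prolonged performance cost described above.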

Limit Concurrent Tests

Avoid running too many experiments at once. Beyond the performance impact of increased JavaScript payload and complexity, running multiple tests on the same page can make it difficult to get a clear result. The tests can influence each other, which skews the data and makes it hard to isolate the true impact of any single change. Prioritize your tests based on potential impact and run fewer, more meaningful experiments.

Conduct Quarterly Audits

Schedule a regular review (e.g., quarterly) of all third-party scripts, including A/B testing tools. Remove any tools or scripts that are no longer in use to prevent performance degradation from "ghost scripts."
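Part of such an audit can be automated by listing the hosts of third-party scripts a page loads and comparing them against the tools you knowingly use. A minimal sketch, assuming you collect script URLs yourself (in a browser, for example, from `performance.getEntriesByType("resource")` entries whose `initiatorType` is `"script"`); the function name and hostnames below are invented.

```typescript
// Returns the hosts of loaded scripts that are neither first-party nor on the
// list of approved tools, sorted for stable output.
function findUnexpectedScriptHosts(
  scriptUrls: readonly string[],
  pageOrigin: string,
  approvedHosts: readonly string[],
): string[] {
  const pageHost = new URL(pageOrigin).hostname;
  const approved = new Set(approvedHosts);
  const unexpected = new Set<string>();
  for (const raw of scriptUrls) {
    // Relative URLs resolve against the page origin and count as first-party.
    const host = new URL(raw, pageOrigin).hostname;
    if (host !== pageHost && !approved.has(host)) unexpected.add(host);
  }
  return Array.from(unexpected).sort();
}

// Hypothetical inventory: one approved testing tool and one leftover "ghost script".
const leftovers = findUnexpectedScriptHosts(
  [
    "https://shop.example/app.js",
    "https://cdn.abtool.example/loader.js",
    "https://old-testing-tool.example/snippet.js",
    "/static/bundle.js",
  ],
  "https://shop.example",
  ["cdn.abtool.example"],
);
console.log(leftovers); // contains only "old-testing-tool.example"
```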

Performance-Aware Test Design

Do Not Test the LCP Element

This is a critical rule. Using a client-side A/B test to modify your Largest Contentful Paint element (e.g., the main hero banner or product image) will almost certainly harm your LCP score. Any changes to this critical element should be tested server-side if possible.
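Before planning a test, it helps to confirm which element actually is the LCP element on a given page template. A minimal sketch using the browser's standard `PerformanceObserver` with the `largest-contentful-paint` entry type; `describeLcp` and `LcpEntryLike` are hypothetical helpers, and the observer only runs where the browser supports LCP reporting.

```typescript
// Minimal shape of a "largest-contentful-paint" performance entry used below.
interface LcpEntryLike {
  startTime: number;
  url: string;
  element: { tagName: string; id: string } | null;
}

// The browser may report several LCP candidates while the page loads;
// the most recently reported one is the current LCP element.
function describeLcp(entries: readonly LcpEntryLike[]): string | null {
  const latest = entries[entries.length - 1];
  if (latest === undefined) return null;
  const el = latest.element;
  const name = el === null
    ? "(element no longer in the DOM)"
    : el.tagName.toLowerCase() + (el.id ? `#${el.id}` : "");
  const source = latest.url ? ` (${latest.url})` : "";
  return `${name} at ${Math.round(latest.startTime)} ms${source}`;
}

// Browser wiring: log the LCP element so it can be kept out of client-side tests.
if (
  typeof PerformanceObserver !== "undefined" &&
  PerformanceObserver.supportedEntryTypes?.includes("largest-contentful-paint")
) {
  new PerformanceObserver((list) => {
    console.log("LCP element:", describeLcp(list.getEntries() as unknown as LcpEntryLike[]));
  }).observe({ type: "largest-contentful-paint", buffered: true });
}
```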

Favor Server-Side Testing When Possible

For long-running tests or experiments on critical user flows (like checkout), we strongly recommend using a server-side A/B testing approach. While it requires more initial setup, it completely bypasses the client-side performance penalties and provides a faster, more stable experience for your users.